Data migration from CouchDB

To migrate data from CouchDB:

- Download the couch2pg tool. You can use the command below to download it

git clone https://github.com/medic/medic-couch2pg.git

- Create a Postgres database that will act as the staging database for the migration to the OpenSRP Postgres database

- Change directory to medic-couch2pg

- Execute the commands below in the terminal to start the couch2pg tool. Replace the placeholders with the correct values

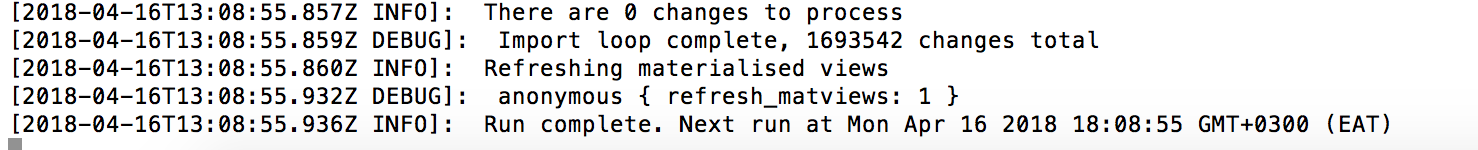

export POSTGRESQL_URL=postgresql://<postgres_username>:<postgres_password>@localhost:5432/<postgres_staging_databaseexport COUCHDB_URL=http://<couch_username>:<couch_password>@localhost:5984/<couch_database>export COUCH2PG_SLEEP_MINS=120export COUCH2PG_DOC_LIMIT=1000export COUCH2PG_RETRY_COUNT=5node index.js - Wait after all the data has been uploaded on the postgres staging database. You should see "Run complete" on the terminal after the data import into postgres is completed. Example of output when tool finishes migrating data is illustrated below

- Copy the couchdb table from the staging Postgres database above to the OpenSRP database. Use the command

pg_dump -t couchdb <postgres_staging_database> | psql <opensrp_database>

- Execute the scripts in the data_migration_scripts folder below while connected to the Postgres database opensrp_database

opensrp/assets/migrations/data_migration_scripts

- Delete the couchdb table in the OpenSRP database. You can use the statement below

DROP TABLE couchdb;
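The script-execution step above can be sketched as a small shell helper. This is a minimal sketch, not part of the OpenSRP tooling: the function name `run_migration_scripts`, the `PSQL` override variable, the assumption that the scripts end in `.sql`, and the assumption that file-name order is the intended execution order are all illustrative and should be checked against the actual contents of data_migration_scripts.

```shell
# Hypothetical helper: run every *.sql file in a directory against one database.
# Assumes the scripts end in .sql and that file-name order is the intended order.
# PSQL can be overridden (for example with a stub command) to preview the calls.
run_migration_scripts() {
    scripts_dir=$1
    database=$2
    psql_cmd=${PSQL:-psql}

    if [ ! -d "$scripts_dir" ]; then
        echo "No such directory: $scripts_dir" >&2
        return 1
    fi

    # The shell expands the glob in sorted order.
    for script in "$scripts_dir"/*.sql; do
        # If nothing matched, the pattern is left unexpanded; skip it.
        [ -e "$script" ] || continue
        echo "Running $script" >&2
        # ON_ERROR_STOP makes psql exit with a non-zero status on the first SQL error.
        "$psql_cmd" -v ON_ERROR_STOP=1 -d "$database" -f "$script" || return 1
    done
}
```

A call might look like `run_migration_scripts opensrp/assets/migrations/data_migration_scripts <opensrp_database>`. Stopping at the first failing script is a design choice made here so that a partial migration is noticed before the couchdb staging table is dropped.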

Migrating very large databases

The data_migration_scripts use insert select statements to migrate data from the couchdb staging table to the relevant OpenSRP database table(s). Each script selects all documents of a particular domain type, e.g. Clients, and inserts them into the corresponding OpenSRP database table.
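As an illustration of the insert select pattern, the sketch below wraps one such statement for actions in a shell function. The table and column names (`core.action`, `json`, and the `doc` column of `couchdb`) are taken from the cursor example in this document; the function name is hypothetical, and the actual statements in data_migration_scripts may differ.

```shell
# Hypothetical helper: print an INSERT ... SELECT statement that copies every
# Action document from the couchdb staging table into core.action.
# Table and column names follow the cursor example in this document.
action_insert_select_sql() {
    cat <<'SQL'
INSERT INTO core.action (json)
SELECT doc
FROM couchdb
WHERE doc->>'type' = 'Action';
SQL
}
```

The printed statement could then be piped to psql while connected to the OpenSRP database, for example `action_insert_select_sql | psql -v ON_ERROR_STOP=1 -d <opensrp_database>`. All matching documents are moved by a single statement.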

The insert select approach may not work for very large databases with tens of millions of records per document type because of memory overhead. In that scenario you may have to use Postgres database cursors.

However, insert select was tested with 5.6 million action records and took 12 minutes to import the data from the couchdb staging table into the OpenSRP database tables action and action_metadata.

Below is an example of an actions migration that uses cursors. It can be extended to support all domain objects, if required, by adapting the data_migration_scripts to use cursors.

    DO $$
    DECLARE
        -- Staging rows whose document type is Action
        couchdb_cursor CURSOR FOR SELECT * FROM couchdb WHERE doc->>'type' = 'Action';
        -- Rows of core.action, read after the first loop has populated it
        actions_cursor CURSOR FOR SELECT * FROM core.action;
        t_action RECORD;
    BEGIN
        -- Pass 1: copy each Action document into core.action
        OPEN couchdb_cursor;
        LOOP
            FETCH couchdb_cursor INTO t_action;
            EXIT WHEN NOT FOUND;
            INSERT INTO core.action(json) VALUES (t_action.doc);
        END LOOP;
        CLOSE couchdb_cursor;

        -- Pass 2: derive one core.action_metadata row per core.action row
        OPEN actions_cursor;
        LOOP
            FETCH actions_cursor INTO t_action;
            EXIT WHEN NOT FOUND;
            INSERT INTO core.action_metadata(action_id, document_id, base_entity_id, server_version,
                                             provider_id, location_id, team, team_id)
            VALUES (t_action.id, t_action.json->>'_id', t_action.json->>'baseEntityId',
                    (t_action.json->>'timeStamp')::BIGINT,
                    t_action.json->>'providerId', t_action.json->>'locationId',
                    t_action.json->>'team', t_action.json->>'teamId');
        END LOOP;
        CLOSE actions_cursor;
    END $$;
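One way to extend the first pass of the cursor example to other domain objects is to generate the DO block from a document type and a target table. The sketch below is only an assumption about how that might be done: the function name `cursor_copy_sql` is hypothetical, and it assumes every target table has a `json` column like `core.action`. The metadata pass is specific to each domain and is not generated here.

```shell
# Hypothetical generator: print a DO block that copies documents of one type
# from the couchdb staging table into a target table, one row at a time.
# Assumes the target table has a "json" column like core.action does.
# Arguments are interpolated as-is, so pass only fixed, trusted values.
cursor_copy_sql() {
    doc_type=$1
    target_table=$2
    cat <<SQL
DO \$\$
DECLARE
    couchdb_cursor CURSOR FOR SELECT * FROM couchdb WHERE doc->>'type' = '${doc_type}';
    t_doc RECORD;
BEGIN
    OPEN couchdb_cursor;
    LOOP
        FETCH couchdb_cursor INTO t_doc;
        EXIT WHEN NOT FOUND;
        INSERT INTO ${target_table}(json) VALUES (t_doc.doc);
    END LOOP;
    CLOSE couchdb_cursor;
END \$\$;
SQL
}
```

For example, `cursor_copy_sql Action core.action` prints a block equivalent to the first loop above, and its output can be piped to psql while connected to the OpenSRP database.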